Mandelbrot: Introduction and definitions

Introduction

When I first started learning Python, around 2013, I tried my hand at visualizing the Mandelbrot fractal: a simple mathematical formula with infinite complexity that can create the most wondrous images. Sometime last year, I decided to pick this toy project up again.

At first it was just for fun, but later I set myself a goal: since I actively program in both Python and C++ on a day-to-day basis, why not create my own ‘benchmark’ of the differences in performance and coding experience between the two while I’m at it? (In time, I might add JavaScript/TypeScript to this experiment too.)

Very roughly, you encounter the following opinions:

- Execution time: “Python is slow, C++ is fast”

- Development experience:

  - “Python is easy and fast to develop, C++ is hard and slow”

  - “Interpreted Python is bug-prone; typed and compiled C++ is the way”

Of course, we could continue this and get stuck in an “interpreted vs. compiled” discussion, and I won’t: compiled code will typically win when purely considering performance, while the development experience is as heavily opinionated as it is dependent on the use case and the experience of the developer. In the end, the answer always remains: ‘It depends’. My personal goal here is simply to create a first-hand, direct comparison of these topics between these two languages.

Performance-wise, it is very easy to write a slow Python program, while a smart (C++) compiler will provide you with many off-the-shelf optimisations (although it is still pretty easy to write a slow C++ program, too). However, I have always developed my Python code with performance in mind, and when people say that Python is slow, it is often really the implementation that is slow.

Bad implementations aside, Python and its ecosystem are king when it comes to interfacing with other languages and using their strengths, while retaining its own. Think NumPy, Pandas (or Polars) and Numba (which even compiles your Python/NumPy code to native machine code via LLVM), among many others: powerful tools that bring the speed of compiled code (written in C, C++ or Rust, or generated via LLVM in Numba’s case) to the interpreted scripting and fast-prototyping convenience of Python.

This about concludes my motivation for this personal project, and why I let it grow into something worth writing about. Without further ado: let’s introduce the Mandelbrot set.

The Mandelbrot Set

The Mandelbrot set’s equation is simple:

$$z_{n+1} = z_n^2 + c, \qquad z_0 = 0$$

Here, $c$ is a complex number, and so $z_n$ will be too.

The set of numbers $c$ for which this sequence does not diverge to infinity as $n \to \infty$ is called the Mandelbrot set. No matter how many iterations we do, $|z_n|$ will always remain bounded if $c$ is in the set.
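To make the definition concrete, here is a small Python sketch (the function name `orbit` is hypothetical) that lists the first terms of the sequence for two values of $c$: $c = -1$, which is in the set, and $c = 1$, which is not.

```python
def orbit(c: complex, n_terms: int) -> list[complex]:
    """Return the first n_terms values z_0, z_1, ... of z_{n+1} = z_n**2 + c, with z_0 = 0."""
    z = 0j
    values = [z]
    for _ in range(n_terms - 1):
        z = z * z + c
        values.append(z)
    return values


# c = -1 is in the set: the sequence cycles between 0 and -1 forever.
print(orbit(-1, 6))
# c = 1 is not in the set: the magnitudes 0, 1, 2, 5, 26, 677, ... grow without bound.
print([abs(z) for z in orbit(1, 6)])
```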

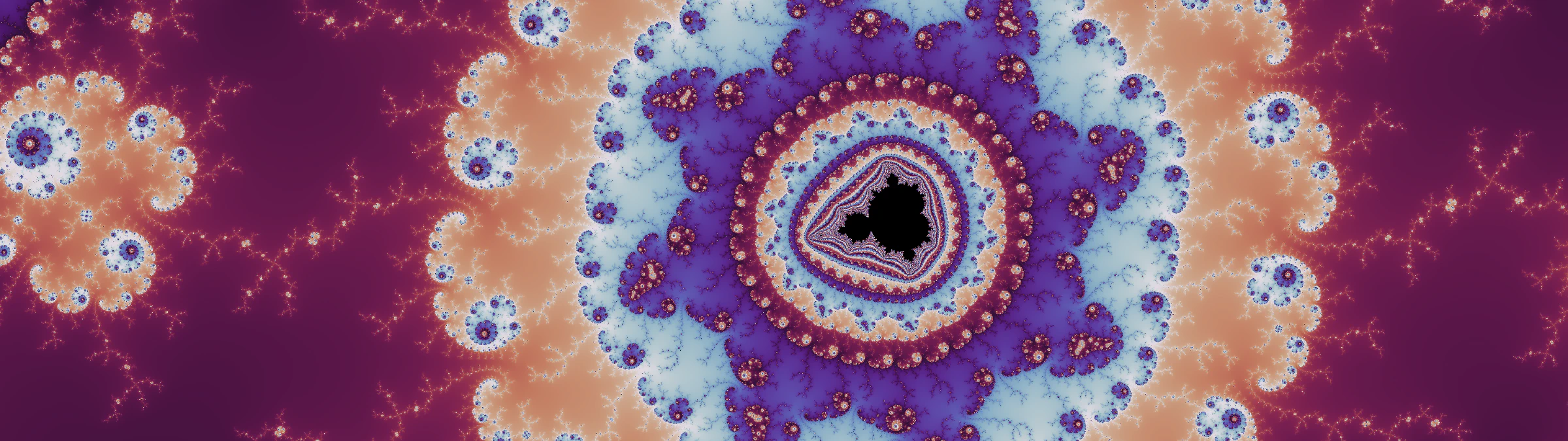

To create images, the real and imaginary axes of $c$ are mapped to a pixel’s x-y location. Pixels corresponding to values of $c$ that are in the set are typically colored black; they are found ‘on the inside’ of the characteristic shape.
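A minimal sketch of this mapping, assuming a linear map and a default view of $[-2, 1] \times [-1.5, 1.5]$ in the complex plane (both the function name `pixel_to_complex` and the default bounds are illustrative choices, not taken from this project):

```python
def pixel_to_complex(px: int, py: int, width: int, height: int,
                     re_min: float = -2.0, re_max: float = 1.0,
                     im_min: float = -1.5, im_max: float = 1.5) -> complex:
    """Map pixel (px, py) linearly to a point c in the complex plane.

    px runs left to right along the real axis. py runs top to bottom in image
    coordinates, so it is flipped to make the imaginary axis point up.
    """
    re = re_min + px * (re_max - re_min) / (width - 1)
    im = im_max - py * (im_max - im_min) / (height - 1)
    return complex(re, im)


# The top-left and bottom-right pixels land exactly on the corners of the view.
print(pixel_to_complex(0, 0, 301, 301), pixel_to_complex(300, 300, 301, 301))
```

Dividing by `width - 1` and `height - 1` makes the edge pixels hit the view boundaries exactly; sampling pixel centers instead is an equally valid choice.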

Plotting the Mandelbrot equation

In practice, to create our colorful images, we iterate $z_{n+1} = z_n^2 + c$ up to a maximum of $N$ times for every pixel. However, if we do this naïvely, we arrive at a binary answer (“in the set” or “not in the set”) and create black-and-white images. Not very appealing.

Fortunately, there is a mathematical threshold beyond which we are certain the sequence will diverge. Using it, we can conclude at any iteration $n$ that $z_n$ will diverge for this value of $c$, and stop iterating. The iteration count at which we stop is a measure of how fast $z_n$ diverges, and can take any integer value in $[0, N]$. Apply this count to a colormap, and we have a colored image! This threshold is called the escape radius.
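The escape-time idea can be sketched as follows; `escape_time`, `max_iter` (playing the role of $N$) and the default values are hypothetical names chosen for illustration:

```python
def escape_time(c: complex, max_iter: int = 100, radius: float = 2.0) -> int:
    """Return the first n for which |z_n| > radius.

    Returns max_iter if the sequence has not escaped within max_iter
    iterations, which we then treat as 'in the set'.
    """
    z = 0j
    for n in range(max_iter):
        if abs(z) > radius:  # z currently holds z_n
            return n
        z = z * z + c
    return max_iter


# c = 1 escapes quickly (|z_3| = 5 > 2); c = 0 and c = 1j stay bounded.
for c in (1, 0, 1j):
    print(c, escape_time(c))
```

Points that never escape return `max_iter`; these are the ones that end up colored black.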

Escape radius

The escape radius is called as such because it relates to the magnitude $|z_n|$, which in the complex plane is a distance from the origin: a radius. Once $|z_n|$ exceeds this radius, the sequence escapes for good, much like nothing comes back once it has passed the event horizon of a black hole.

The escape radius for the Mandelbrot set’s equation is 2. This might seem like a random number, but it makes sense once you see the math. A clear proof, which actually shows where the ‘2’ comes from rather than merely showing that it works for that number, is given on this website, which I will quote here verbatim:


So, if $|z_n| > 2$, then we are certain that $c$ is not in the set, and we stop iterating. Note that 2 is the minimum required value; if you want, you can pick a higher number.
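For reference, the standard argument behind this can be sketched with the reverse triangle inequality $|a + b| \ge |a| - |b|$. This is a summary of the textbook reasoning, not a reproduction of the quoted proof:

$$
\begin{aligned}
&\text{Case } |c| \le 2 \text{ and } |z_n| = 2 + \varepsilon,\ \varepsilon > 0 \text{ (so } |c| < |z_n|\text{):} \\
&\quad |z_{n+1}| = |z_n^2 + c| \ge |z_n|^2 - |c| \ge |z_n|^2 - |z_n| = |z_n|\,(|z_n| - 1) = (1 + \varepsilon)\,|z_n|. \\
&\quad \text{Hence } |z_{n+1}| > 2 + \varepsilon, \text{ the same bound applies again, and } |z_{n+k}| \ge (1 + \varepsilon)^k\,|z_n| \to \infty. \\
&\text{Case } |c| > 2: \quad z_1 = c, \text{ so } |z_1| \ge |c|, \text{ and whenever } |z_n| \ge |c|: \\
&\quad |z_{n+1}| \ge |z_n|\,(|z_n| - 1) \ge (|c| - 1)\,|z_n| > |z_n| \ge |c|, \text{ so this sequence diverges too.}
\end{aligned}
$$

Either way, a $c$ whose sequence reaches $|z_n| > 2$ cannot be in the set.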

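We would normally calculate $|z_n|$ and compare it as:

$$\sqrt{\mathrm{Re}(z_n)^2 + \mathrm{Im}(z_n)^2} > 2$$

However, since we want to avoid taking the computationally heavy square root, we typically square both sides instead:

$$\mathrm{Re}(z_n)^2 + \mathrm{Im}(z_n)^2 > 4$$

Since this number 4 is strictly speaking no longer a radius, it is often referred to as the ‘bailout’.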
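The same count with the squared-magnitude bailout might look like the sketch below. Working with the real and imaginary parts as plain floats, via $(x + iy)^2 + c = (x^2 - y^2 + \mathrm{Re}(c)) + i\,(2xy + \mathrm{Im}(c))$, lets the squares computed for the bailout check be reused for the next iteration. The function name `escape_time_bailout` is hypothetical:

```python
def escape_time_bailout(c: complex, max_iter: int = 100, bailout: float = 4.0) -> int:
    """Escape-time count using x*x + y*y > bailout instead of sqrt(x*x + y*y) > 2."""
    cx, cy = c.real, c.imag
    x = y = 0.0  # real and imaginary parts of z_n, starting at z_0 = 0
    for n in range(max_iter):
        x2, y2 = x * x, y * y
        if x2 + y2 > bailout:  # squared magnitude: no square root needed
            return n
        # (x + iy)^2 + c = (x^2 - y^2 + cx) + i(2xy + cy); the right-hand side
        # is evaluated with the old x and y before both are reassigned.
        x, y = x2 - y2 + cx, 2.0 * x * y + cy
    return max_iter


print(escape_time_bailout(1), escape_time_bailout(-2))
```

Note that $c = -2$ settles at $z_n = 2$, exactly on the escape radius; since the comparison is strict, it is correctly kept inside the set.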

(Smooth) Coloring

The simplest way to color the Mandelbrot set is to take the iteration count, apply it to a colormap (or anything that maps a scalar to a color), do this for every pixel linked to a $c$, et voilà. Ideally, take a continuous and cyclic colormap (one that starts and ends with the same color), and also make the iteration count loop (i.e. n % 255).
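As an illustration, assuming a hand-rolled palette rather than a Matplotlib colormap: sweeping the HSV hue once around the color wheel gives a cyclic palette, and a modulo keeps any iteration count inside it. This sketch loops with `n % len(PALETTE)` (256 entries here) rather than `n % 255`; `make_cyclic_palette`, `PALETTE` and `color_for` are hypothetical names.

```python
import colorsys


def make_cyclic_palette(size: int = 256) -> list[tuple[int, int, int]]:
    """Build a cyclic palette by sweeping the HSV hue once around the circle.

    Hue 0 and hue 1 are both red, so the last entry blends back into the first.
    """
    palette = []
    for i in range(size):
        r, g, b = colorsys.hsv_to_rgb(i / size, 1.0, 1.0)  # floats in [0, 1]
        palette.append((round(255 * r), round(255 * g), round(255 * b)))
    return palette


PALETTE = make_cyclic_palette()


def color_for(n: int, max_iter: int) -> tuple[int, int, int]:
    """Map an escape-time count to an RGB color; points in the set are black."""
    if n >= max_iter:
        return (0, 0, 0)
    # Loop the count through the palette so high counts keep cycling through colors.
    return PALETTE[n % len(PALETTE)]


print(color_for(0, 100), color_for(100, 100))
```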

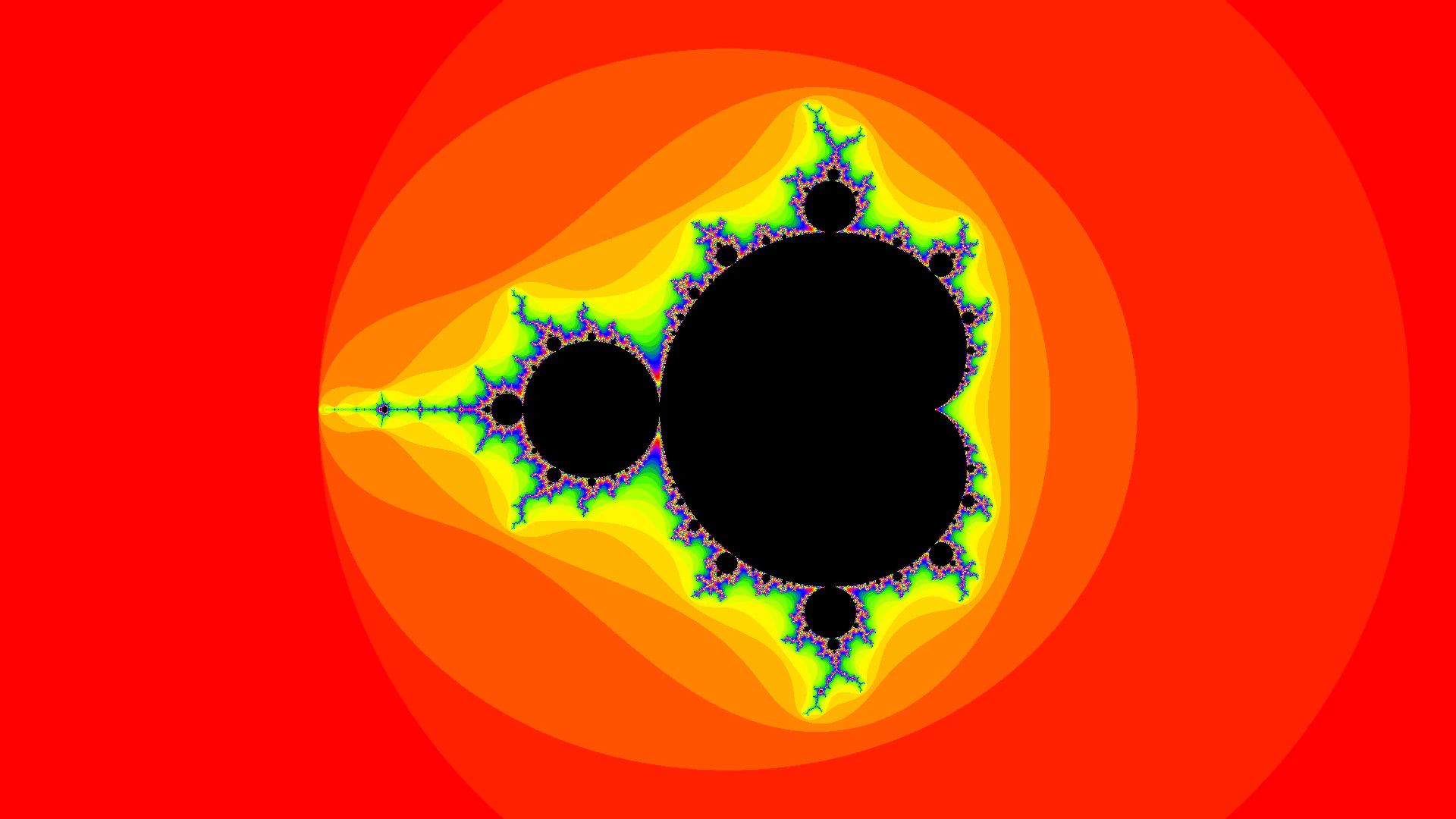

However, since each iteration count is an integer, this will result in ‘bands’ of color; see Figure 1a.

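Instead, we would like to transform our iteration count into a fractional (floating-point) value, using a measure of how far past the escape radius $|z_n|$ ended up when it exceeded it. Mathematicians came up with the following formula, where $n$ is the iteration at which $|z_n|$ first exceeds the escape radius:

$$n_{\text{smooth}} = n + 1 - \log_2\left(\log_2 |z_n|\right)$$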

When programming this, we can simplify this a bit for efficiency:

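$$\text{log\_zn} = \tfrac{1}{2}\log\left(x^2 + y^2\right), \qquad \nu = \frac{\log\left(\text{log\_zn} / \log 2\right)}{\log 2}, \qquad n_{\text{smooth}} = n + 1 - \nu$$

with $x = \mathrm{Re}(z_n)$, $y = \mathrm{Im}(z_n)$, and $\log$ the natural logarithm.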

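log_zn can be calculated like this because the magnitude of a complex number $z_n = x + iy$ simply follows Pythagoras’ theorem, after which we apply the power rule of logarithms:

$$\log|z_n| = \log\sqrt{x^2 + y^2} = \log\left(\left(x^2 + y^2\right)^{1/2}\right) = \tfrac{1}{2}\log\left(x^2 + y^2\right)$$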
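Putting the pieces together, a sketch of the smoothed count using the $n + 1 - \log_2(\log_2|z_n|)$ variant and the squared-magnitude bailout of 4. The name `smooth_escape_time` is hypothetical, and this is one way to write it rather than the implementation used for the figures:

```python
import math

LOG2 = math.log(2)


def smooth_escape_time(c: complex, max_iter: int = 100) -> float:
    """Fractional escape-time count n + 1 - log2(log2|z_n|).

    Returns float(max_iter) for points that do not escape within max_iter iterations.
    """
    cx, cy = c.real, c.imag
    x = y = 0.0
    for n in range(max_iter):
        mag2 = x * x + y * y
        if mag2 > 4.0:  # bailout: |z_n|^2 > 4
            log_zn = math.log(mag2) / 2          # log|z_n| without a square root
            nu = math.log(log_zn / LOG2) / LOG2  # log2(log2|z_n|)
            return n + 1 - nu
        x, y = x * x - y * y + cx, 2.0 * x * y + cy
    return float(max_iter)


# c = 1 escapes at n = 3 with |z_3| = 5, giving 4 - log2(log2(5)), roughly 2.78.
print(smooth_escape_time(1))
```

Because $|z_n|$ varies continuously with $c$ between escapes, the fractional part smooths out the jumps between neighbouring integer counts, which is what removes the bands.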

Unfortunately, I am not enough of a mathematician to understand, let alone explain, the math behind this equation, which turns out to be rather complicated. The DIY linearized-bounds argument in this blog post might give a first feel for the subject. However, the ‘simplified explanation’ on this webpage provides insight into the actual formula:


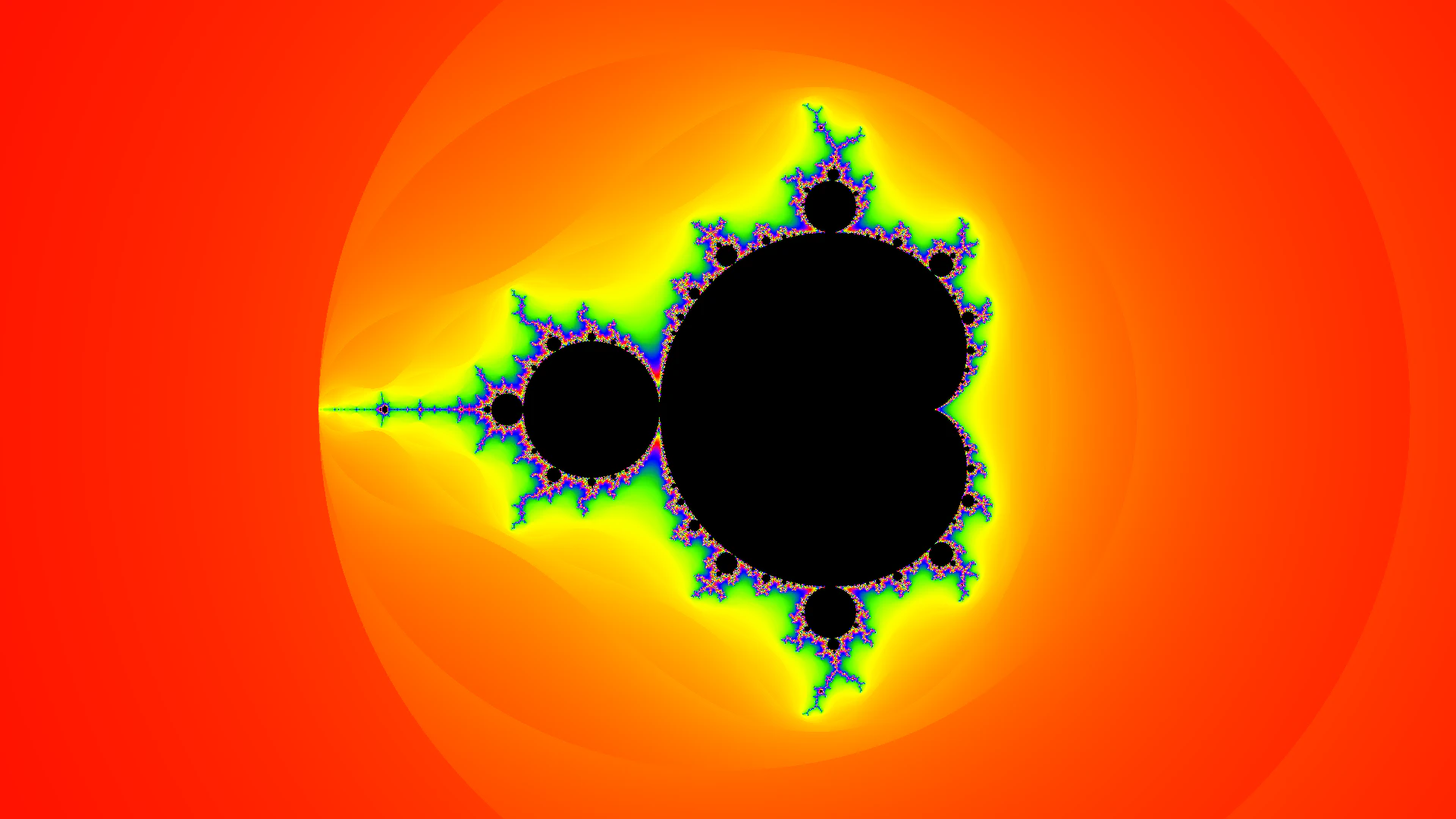

As a result, Figure 1 shows the Mandelbrot set plotted using unsmoothed integer (a) and smoothed fractional coloring (b). The colormap is Matplotlib’s ‘prism’, which is a bit too intense to be pretty, but illustrative.