(Multi-fidelity) Bayesian Optimisation

(Multi-fidelity) Efficient Global optimisation

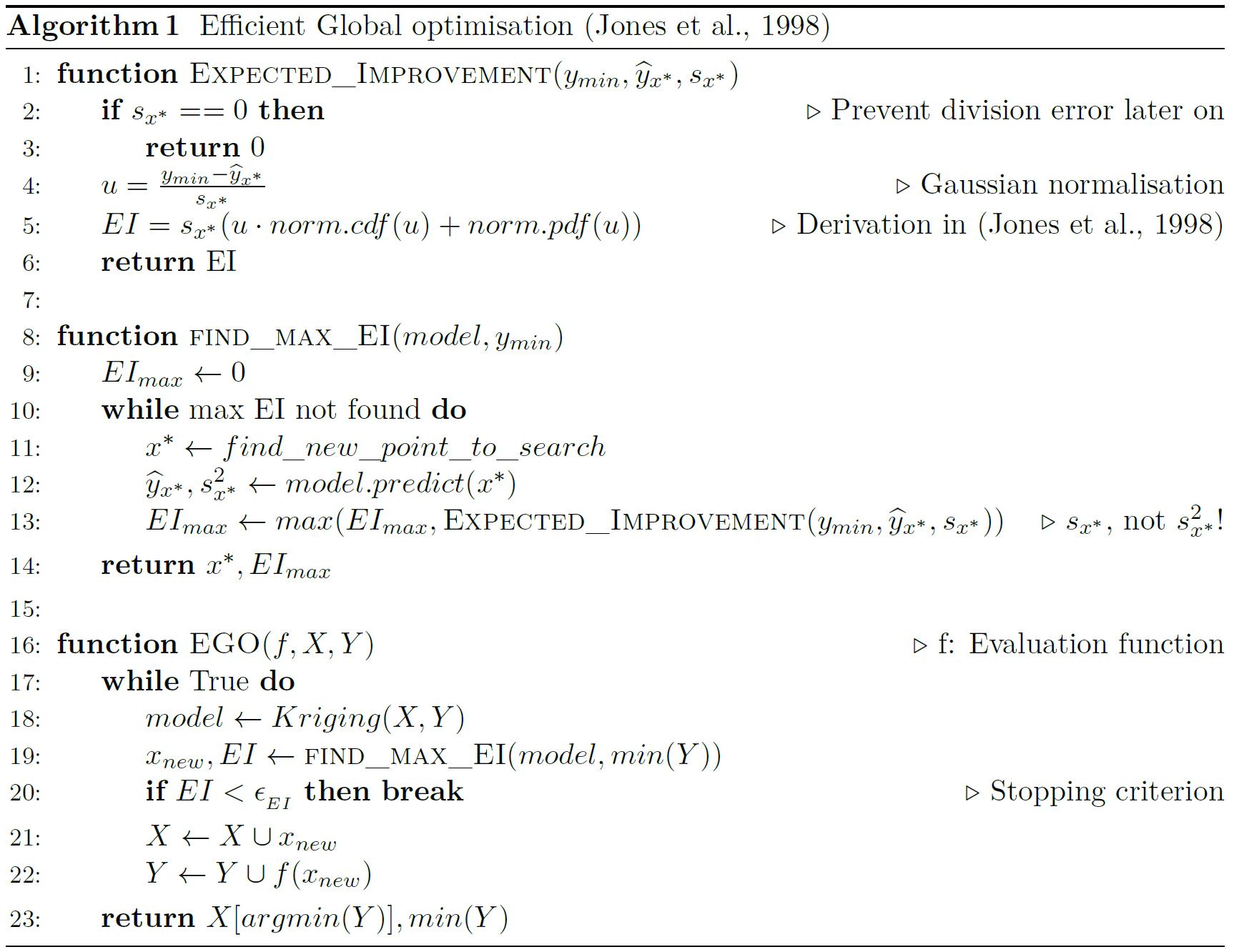

The Efficient Global optimisation (EGO) algorithm (Citation: Jones, Schonlau & al., 1998 Jones, D., Schonlau, M. & Welch, W. (1998). Efficient Global Optimization of Expensive Black-Box Functions. Journal of Global Optimization, 13(4). 455–492. https://doi.org/10.1023/A:1008306431147 ) is a form of Bayesian optimisation. The EGO algorithm adaptively samples additional points based on the Expected Improvement criterion (Citation: Jones, Schonlau & al., 1998 Jones, D., Schonlau, M. & Welch, W. (1998). Efficient Global Optimization of Expensive Black-Box Functions. Journal of Global Optimization, 13(4). 455–492. https://doi.org/10.1023/A:1008306431147 ), which is formulated using the estimated mean squared error that Kriging provides. Using this criterion, EGO provably converges to the global optimum in an optimisation process (Citation: Locatelli, 1997 Locatelli, M. (1997). Bayesian Algorithms for One-Dimensional Global Optimization. Journal of Global Optimization, 10(1). 57–76. https://doi.org/10.1023/A:1008294716304 ), although it may exhaustively sample around local optima (Citation: Forrester, Sóbester & al., 2007 Forrester, A., Sóbester, A. & Keane, A. (2007). Multi-fidelity optimization via surrogate modelling. Proceedings of the Royal Society A: Mathematical, Physical and Engineering Sciences, 463(2088). 3251–3269. https://doi.org/10.1098/rspa.2007.1900 ). An overview of the algorithmic procedure is given by Algorithm 1.

Expected improvement

The expected improvement is the expectation of those parts of the normally distributed variable of the Kriging estimator that retrieves a better result than the current best objective value :

Using integration by parts we retrieve the formulation of Citation: Jones, Schonlau & al. (1998) Jones, D., Schonlau, M. & Welch, W. (1998). Efficient Global Optimization of Expensive Black-Box Functions. Journal of Global Optimization, 13(4). 455–492. https://doi.org/10.1023/A:1008306431147 :

Here and are the standard normal Gaussian cumulative distribution function and probability density function respectively. A practical implementation can be found in Algorithm 1.

In this post we saw that unless the variance at sampled points is not zero. In such a scenario, the expected improvement is non-zero too and the EGO algorithm might re-sample previously sampled locations. Since we assess deterministic computer experiments, this would provide us with no extra information. Therefore, if we want to use a regressing Kriging model in combination with the EGO algorithm we should perform re-interpolation (Citation: Forrester, Keane & al., 2006 Forrester, A., Keane, A. & Bressloff, N. (2006). Design and analysis of “noisy” computer experiments. AIAA Journal, 44(10). 2331–2339. https://doi.org/10.2514/1.20068 ), which is informally but equivalently performed as:

- Build a regressing Kriging model based on the noisy but deterministic observations

- Build an interpolating Kriging model using the predictions of the regressing Kriging model

- Variance and Expected Improvement is 0 at sampled points again

A benchmark of Kriging-based infill criteria for noisy optimisation is found in Citation: Picheny, Wagner & al. (2013) Picheny, V., Wagner, T. & Ginsbourger, D. (2013). A benchmark of kriging-based infill criteria for noisy optimization. Structural and Multidisciplinary Optimization, 48(3). 607–626. https://doi.org/10.1007/s00158-013-0919-4 . The expected improvement criterion combined with re-interpolation (Citation: Forrester, Keane & al., 2006 Forrester, A., Keane, A. & Bressloff, N. (2006). Design and analysis of “noisy” computer experiments. AIAA Journal, 44(10). 2331–2339. https://doi.org/10.2514/1.20068 ) turns out to be the best option for deterministic computer experiments.

Infill search routine

Searching the response surface for the maximum Expected Improvement is an auxiliary optimisation problem involved with the Efficient Global optimisation routine. This routine is not often described extensively since the Kriging prediction function and thereby the expected improvement are very cheap to evaluate when compared to for instance the hyperparameter tuning process.

Citation: Jones (2001) Jones, R. (2001). A Taxonomy of Global Optimization Methods Based on Response Surfaces. Journal of Global Optimization, 21. 345–383. uses a multi-start hillclimbing algorithm with each starting point between a neighbouring pair of sample locations. This is a simple and effective strategy for single-objective design problems. During my thesis, I used a similar approach where the starting points are determined by an LHS DoE with a sample size of 40.

Multi-fidelity: Level selection strategy

To extend the EGO algorithm to use a multi-fidelity surrogate and thereby improve the performance of SBGO, we should additionally provide a way to decide which fidelity level we should sample once we have selected a location by means of the expected improvement criterion. Otherwise, we will still be exhaustively sampling the high-fidelity, which we aimed to avoid by using a multi-fidelity approach. Citation: Meliani, Bartoli & al. (2019) Meliani, M., Bartoli, N., Lefebvre, T., Bouhlel, M., Martins, J. & Morlier, J. (2019). Multi-fidelity efficient global optimization: Methodology and application to airfoil shape design. AIAA Aviation 2019 Forum. https://doi.org/10.2514/6.2019-3236 proposes such a methodology using a fully nested DoE, which means all lower fidelities are sampled whenever and where a higher fidelity is sampled.

First, the expected variance reduction of sampling a level in a nested fashion is given:

Here is the prediction variance of level i, or the last term of . The term can similarly be identified there. The cost of sampling a level is defined by:

Lastly, we find the level we want to sample using:

Citation: Korondi, Marchi & al. (2021) Korondi, P., Marchi, M., Parussini, L. & Poloni, C. (2021). Multi-fidelity design optimisation strategy under uncertainty with limited computational budget. Optimization and Engineering, 22(2). 1039–1064. https://doi.org/10.1007/s11081-020-09510-1 adopts a similar approach for non-nested DoE’s that reduces to the formulation of Citation: Meliani, Bartoli & al. (2019) Meliani, M., Bartoli, N., Lefebvre, T., Bouhlel, M., Martins, J. & Morlier, J. (2019). Multi-fidelity efficient global optimization: Methodology and application to airfoil shape design. AIAA Aviation 2019 Forum. https://doi.org/10.2514/6.2019-3236 for nested DoE’s.

Bibliography

- Forrester, Keane & Bressloff (2006)

- Forrester, A., Keane, A. & Bressloff, N. (2006). Design and analysis of “noisy” computer experiments. AIAA Journal, 44(10). 2331–2339. https://doi.org/10.2514/1.20068

- Forrester, Sóbester & Keane (2007)

- Forrester, A., Sóbester, A. & Keane, A. (2007). Multi-fidelity optimization via surrogate modelling. Proceedings of the Royal Society A: Mathematical, Physical and Engineering Sciences, 463(2088). 3251–3269. https://doi.org/10.1098/rspa.2007.1900

- Jones, Schonlau & Welch (1998)

- Jones, D., Schonlau, M. & Welch, W. (1998). Efficient Global Optimization of Expensive Black-Box Functions. Journal of Global Optimization, 13(4). 455–492. https://doi.org/10.1023/A:1008306431147

- Jones (2001)

- Jones, R. (2001). A Taxonomy of Global Optimization Methods Based on Response Surfaces. Journal of Global Optimization, 21. 345–383.

- Korondi, Marchi, Parussini & Poloni (2021)

- Korondi, P., Marchi, M., Parussini, L. & Poloni, C. (2021). Multi-fidelity design optimisation strategy under uncertainty with limited computational budget. Optimization and Engineering, 22(2). 1039–1064. https://doi.org/10.1007/s11081-020-09510-1

- Locatelli (1997)

- Locatelli, M. (1997). Bayesian Algorithms for One-Dimensional Global Optimization. Journal of Global Optimization, 10(1). 57–76. https://doi.org/10.1023/A:1008294716304

- Meliani, Bartoli, Lefebvre, Bouhlel, Martins & Morlier (2019)

- Meliani, M., Bartoli, N., Lefebvre, T., Bouhlel, M., Martins, J. & Morlier, J. (2019). Multi-fidelity efficient global optimization: Methodology and application to airfoil shape design. AIAA Aviation 2019 Forum. https://doi.org/10.2514/6.2019-3236

- Picheny, Wagner & Ginsbourger (2013)

- Picheny, V., Wagner, T. & Ginsbourger, D. (2013). A benchmark of kriging-based infill criteria for noisy optimization. Structural and Multidisciplinary Optimization, 48(3). 607–626. https://doi.org/10.1007/s00158-013-0919-4